AI in healthcare is changing how medicine approaches research, testing, and safety monitoring. The technology could make drug testing faster and more efficient, and AI-driven analysis could strengthen vaccine safety monitoring. The proposed VAERS AI integration, for example, aims to improve how vaccine-related adverse events are monitored and tracked. As AI spreads through medicine, it is important to examine both its promise and the pitfalls that come with rapid adoption. The recent debate over RFK Jr.’s AI plan shows how contested the future of healthcare, and the ethics of AI within it, remain.

The use of artificial intelligence in medicine marks a shift in health technology, with the promise of better processes and outcomes across many applications. That shift includes faster drug approval through new AI-based testing methods and closer monitoring of public health programs, such as vaccine administration and safety. As machine learning in medicine draws more attention, terms like computational health analytics and intelligent healthcare systems are used to describe its breadth. As digital tools and algorithms shape more of clinical practice, understanding AI’s impact on medicine becomes essential. The discussion also raises hard questions about trust, efficacy, and the direction of health management.

The Impact of AI in Healthcare

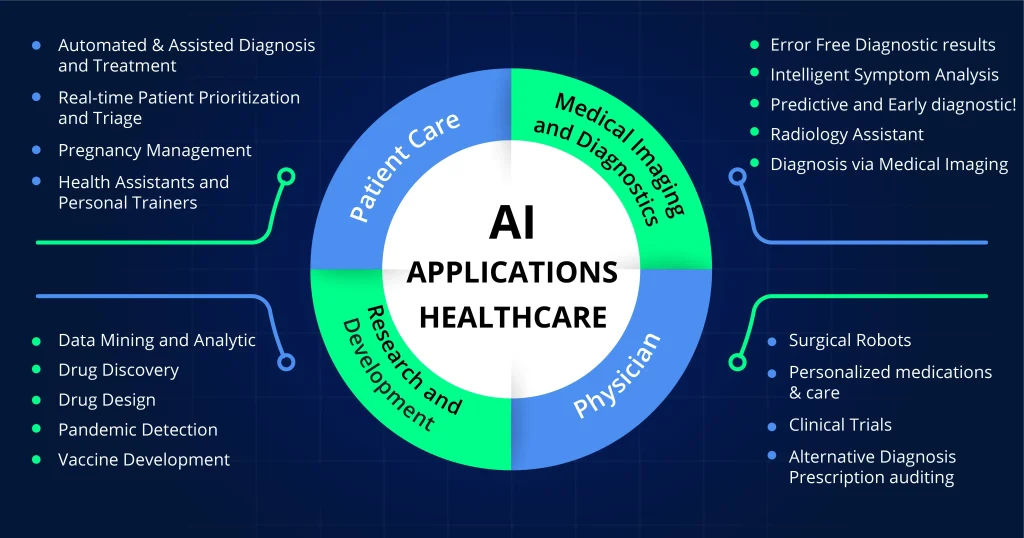

The arrival of artificial intelligence (AI) in healthcare has sparked excitement about potential breakthroughs, but it also raises serious concerns. As prominent figures like RFK Jr. push for greater AI integration in medical systems, the consequences of that shift deserve critical evaluation. Capabilities such as data analysis, predictive modeling, and automated decision-making may speed up work across healthcare, from drug testing to evaluating patient outcomes. Yet rapid adoption calls for caution so that AI does not undermine the integrity of healthcare practice.

AI’s role in monitoring vaccine safety through systems like VAERS is especially contentious. AI can improve the analysis of reports of vaccine-related adverse events, but the accuracy of that analysis depends heavily on the quality of the data entered into the system. If AI is used to advance particular narratives or agendas, such as discrediting vaccines or distorting safety data, the consequences for public health could be dire. Innovation must be balanced with rigorous oversight to protect patients and preserve public trust in medical interventions.

AI Drug Testing: A Double-Edged Sword

AI drug testing promises to revolutionize pharmaceuticals by streamlining drug discovery and approval. Proponents argue that AI can analyze vast libraries of molecular data faster and more efficiently than human researchers, potentially yielding breakthrough treatments in record time. It could also reduce reliance on traditional animal testing, which has long been criticized on both ethical and efficacy grounds. However, as RFK Jr. plans to use AI in drug testing, skeptics question whether these technologies can truly replace traditional methods or only supplement them.

Critics caution that overstating AI’s capabilities could lead to dangerous assumptions about its effectiveness. AI models can strengthen drug testing, but relying on them entirely without human oversight could cause critical safety signals to be missed. The reliability of AI-driven predictions about drug safety also depends on training algorithms with diverse datasets to avoid bias and misrepresentation. Expanding AI drug testing prematurely could endanger public safety and undermine scientific credibility.

Vaccine Safety and AI Integration

Incorporating AI into vaccine safety monitoring, particularly through mechanisms like VAERS, would be a conceptual leap that could change how vaccine-related data is interpreted. Advocates argue that AI-integrated systems could detect adverse events associated with vaccination faster and more effectively. Critics counter that the approach faces serious challenges, particularly around data accuracy and misinterpretation. VAERS accepts reports from anyone, and a report alone does not show that a vaccine caused the event; anti-vaccine activists have nonetheless misused raw VAERS data in the past. That history illustrates the danger: a biased AI system could perpetuate misinformation rather than clarify vaccine safety.

AI’s ability to find patterns in large datasets depends on the quality and variety of its input data. An AI system designed to confirm preconceived notions about vaccine safety, for instance by linking vaccines to unrelated health incidents, could mislead both researchers and policymakers. The medical community recognizes the need for robust systems that prioritize data integrity and transparency. Training AI systems on comprehensive, unbiased datasets is essential if their conclusions about vaccine safety are to meet the standards of scientific rigor.

The Risks of AI in Health Policy

As debate over AI and health policy intensifies, the potential for misuse in public health decision-making is becoming clear. RFK Jr.’s proposal to overhaul systems like VAERS with AI suggests a desire to automate the evaluation of vaccine safety reports, and the implications of that shift deserve careful scrutiny. Integrating AI into health policy requires not only technical sophistication but also an ethical framework that prevents data manipulation capable of fostering public distrust in vaccines.

Misusing AI tools to interpret health data could amplify existing biases and harm vaccination programs and other public health initiatives. Without a rigorous understanding of what AI can and cannot do, health decisions may become reactive rather than evidence-based. As AI evolves, accountability, transparency, and ethical decision-making must remain central to its role in health policy. A multidisciplinary approach that brings together scientists, ethicists, and policymakers can help navigate this complex landscape.

AI Revolution in Medicine: Myths vs. Reality

The phrase ‘AI revolution in medicine’ can evoke a futuristic healthcare system in which machines manage patient care. It is important, however, to be clear about both what AI can do in medicine and where it falls short. AI can support diagnosis and treatment planning, but human expertise remains irreplaceable: decisions about patient care require nuanced judgment that AI cannot replicate, especially where ethical considerations are involved.

Moreover, the narrative promoted by some advocates, including RFK Jr., risks obscuring the need for human oversight of medical AI applications. The idea that AI could take over medical decision-making entirely is fundamentally flawed. As a new wave of healthcare technology arrives, a balanced discussion of both the potential and the limitations of AI in medicine is vital to building trust and effective healthcare practice.

AI Tools and Drug Safety: Ensuring Accountability

Introducing AI tools into drug safety assessment could mark a transformative period for pharmaceuticals, serving the goal of faster drug approvals without sacrificing safety. With AI, pharmaceutical companies can analyze outcomes more efficiently and learn more about adverse effects, which can help protect patients. However, reliance on AI must be paired with stringent regulation that holds drug makers accountable for the outcomes of their AI-enhanced processes.

With RFK Jr. pushing for AI systems in drug safety, there is a real risk that transparency will be traded for speed and cost savings. Pharmaceutical companies could exploit the complexity of AI to obscure risks associated with their products. Using AI tools responsibly and ethically requires transparency, rigorous oversight, and regular audits to verify compliance with safety standards. Prioritizing accountability in AI-driven drug safety assessment ultimately safeguards public health.

Ethics of AI in Vaccine Reporting

The importance of ethics in applying AI to vaccine reporting systems like VAERS cannot be overstated. As AI is considered for systems that evaluate vaccine safety, upholding ethical standards is paramount. Clear guidelines are needed to govern how data is handled and interpreted and how AI-generated insights are used. Protecting public trust in vaccine safety requires a steadfast commitment to ethical practice wherever AI influences reporting and vaccine-related health outcomes.

Bias in AI algorithms poses a substantial threat to the objectivity that vaccine safety evaluations require. An AI system trained on data that reflects preconceived biases or misinformation could falsely validate harmful narratives. Mitigating this risk requires ethical oversight with input from diverse stakeholders, transparency about how AI systems operate, and rigorous checks against misuse. Careful attention to these ethical implications can help ensure that AI strengthens public health rather than undermining vaccine confidence.

Navigating the Future of AI in Health

Looking ahead, strategic planning and careful navigation will determine whether AI primarily serves the interests of public health. Policymakers, scientists, and healthcare professionals must work together to ensure that innovations meet ethical standards, uphold scientific rigor, and earn community trust. The goal should be better health outcomes, with safeguards against disinformation and the misuse of AI tools.

With leaders like RFK Jr. proposing expansive AI initiatives, vigilance will be needed to ensure that technology strengthens rather than undermines fundamental health principles. AI advancements should be carefully scrutinized, validated, and transparently communicated in a collaborative environment to maintain public confidence in healthcare systems.

The Broader Implications of AI Technology in Medicine

Integrating AI into medicine is about more than efficiency; it reshapes the entire healthcare ecosystem. As new digital tools are adopted, their implications for patient care, public trust, and ethical practice must be considered. AI could transform how healthcare is delivered, but it also poses challenges that must be addressed to avoid eroding trust in medical systems. The societal implications of these technologies call for open dialogue about their benefits and risks.

As AI increasingly affects drug testing, vaccine safety, and health policy, stakeholders need ongoing education about its complexities. A solid understanding of AI’s capabilities and limits will help healthcare providers and patients alike navigate this evolving landscape. Public discussion of AI can demystify these technologies and support informed decision-making in healthcare, ultimately benefiting society as a whole.

Frequently Asked Questions

What is the role of AI in healthcare according to RFK Jr.’s plan?

RFK Jr. proposes making artificial intelligence (AI) integral to the US Department of Health and Human Services (HHS), using it to maximize efficiency in decision-making, drug approval, and vaccine safety monitoring. He envisions AI drawing on vast datasets (‘mega data’) to improve healthcare interventions.

How can AI impact drug testing processes in healthcare?

AI could transform drug testing by speeding up the drug approval process. Kennedy’s plan includes phasing out traditional animal testing in favor of AI models, which would use computational approaches and existing safety data to evaluate new pharmaceuticals more efficiently.

What are the implications of integrating AI into the Vaccine Adverse Event Reporting System (VAERS)?

The integration of AI into VAERS could streamline the tracking of vaccine side effects and risks. However, concerns arise about the accuracy of the AI’s interpretations, especially if it is trained on flawed data or used to promote specific agendas regarding vaccine safety.

Is AI drug testing a reliable alternative to animal testing in healthcare?

While AI drug testing offers new avenues for research and development, experts suggest that it cannot fully replace animal testing for biomedical research at this stage. The consensus emphasizes that AI should supplement traditional methods rather than wholly replace them due to the complexities of biological systems.

What challenges does AI face in ensuring vaccine safety assessments?

Implementing AI in vaccine safety assessments presents distinct challenges, including data bias, privacy issues, and the potential for deliberate manipulation by users. AI systems must be rigorously vetted so that they do not draw false conclusions about vaccine safety from skewed or incomplete data.

How essential is expert oversight in AI applications in healthcare?

Expert oversight remains critical in AI applications within healthcare. To prevent misinterpretations and safeguard against biases, robust frameworks and regulations must be established to ensure that AI system outputs align with scientific consensus and public health standards.

What ethical considerations surround AI’s use in evaluating vaccine safety?

Ethical considerations include the risk that AI misrepresents data, potential privacy violations, and the need to maintain public trust in health interventions. Transparency about AI algorithms and data sources is crucial to avoid undermining confidence in vaccines.

What are the risks of relying solely on AI in health decision-making?

Relying solely on AI for health decisions carries risks: models trained on biased data can reproduce that bias and reach incorrect conclusions, and it can become unclear who is accountable for the outcome. AI should serve as a tool that supports human expertise, not as a replacement for it.

How might RFK Jr.’s vision for AI in healthcare influence public perception of vaccines?

RFK Jr.’s vision may polarize public perception, particularly if AI results are perceived to validate pre-existing anti-vaccine sentiments. Effective communication regarding the limitations and proper use of AI will be essential to ensure that it is not misused to promote vaccine misinformation.

| Key Points |
|---|
| RFK Jr. promotes the use of AI in health services, expressing distrust in established science and experts. |
| The current Vaccine Adverse Event Reporting System (VAERS) may be overhauled with AI, potentially fueling misinformation and distrust. |
| AI may be used to replace animal testing in drug approval processes, yet concerns remain about the reliability of the underlying data. |
| Experts warn against the careless adoption of AI in healthcare because of bias, legal challenges, and privacy concerns. |

Summary

AI in healthcare has the potential to revolutionize patient care and drug testing processes, but caution is essential. RFK Jr.’s recent proposals to integrate AI heavily into health policies raise concerns about misinformation and potential bias. While AI can contribute valuable efficiencies and insights, it is crucial to ensure these technologies are developed with rigorous standards to avoid jeopardizing public trust in healthcare.