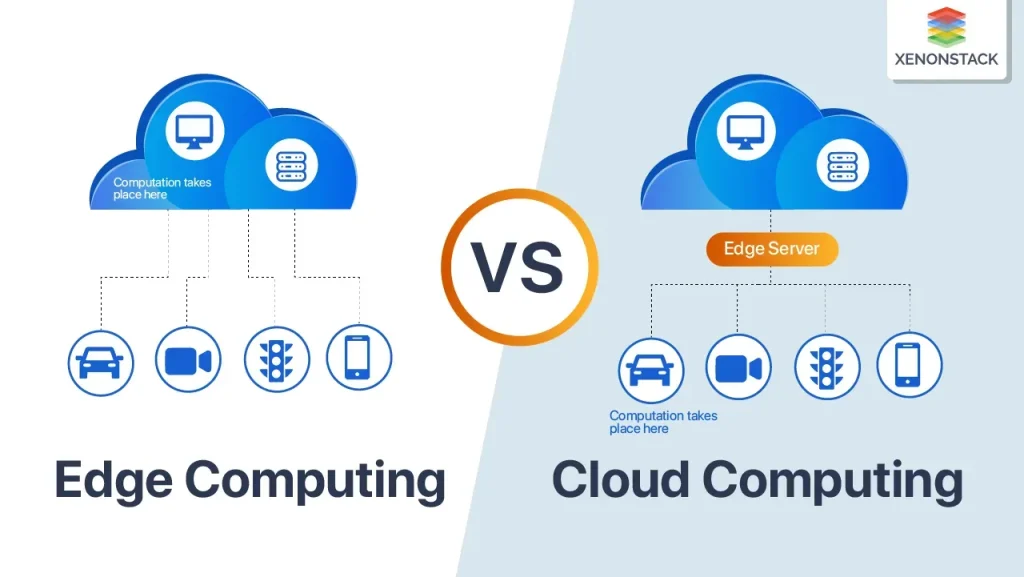

Choosing between cloud and edge computing is a pivotal decision for organizations seeking performance, cost efficiency, and security. Executives must weigh the speed of local decision-making against the centralized analytics, governance, and scale offered by cloud-native platforms. As data volumes grow from devices, sensors, and applications across industries, teams must decide where to run workloads, how to move data efficiently, and which parts of the IT stack belong in the cloud or at the edge. The deciding factors include latency, bandwidth, governance, data sovereignty, operational resilience, and cost of ownership across distributed environments.

The right strategy often blends both approaches: cloud scalability for centralized analytics, and edge processing for near-data computation, local autonomy, offline capability, and real-time decisions at the source. Making that blend work requires clear data flows, consistent security controls, and auditable governance across locations. This article compares the strengths and tradeoffs of cloud-centric and edge-centric models and related paradigms, and offers practical guidance for designing a resilient hybrid cloud strategy that aligns with business goals, regulatory requirements, and security posture. It covers edge computing use cases, industry patterns, and decision frameworks for a cloud-edge continuum that improves responsiveness, reduces costs, and accelerates innovation while keeping governance robust and adaptable.

Viewed through an alternative lens, this evolution is often described as distributed computing that pushes processing toward the network edge via edge-native deployments, perimeter analytics, or fog-like architectures. These terms emphasize bringing computation closer to data sources, reducing latency, and enabling offline operation when connectivity is intermittent. In practice, teams blend centralized cloud capabilities with near-data processing to create a hybrid model (sometimes called a perimeter, fog, or edge-assisted architecture) that preserves governance while speeding up decisions. Understanding these related concepts helps architects design scalable, resilient systems that balance immediacy with broad analytics and regulatory compliance.

Cloud vs Edge Computing: Designing a Hybrid Cloud Strategy for Real-Time and Analytics

Choosing between cloud and edge computing is more than a technology choice; it is a strategic decision that shapes performance, cost, security, and speed of innovation. Framing the problem as a hybrid cloud strategy, one that combines cloud and edge computing rather than picking one, lets teams place each workload where it runs most efficiently. Cloud scalability enables centralized analytics and global governance, while edge processing brings latency-sensitive decisions closer to data sources and reduces bandwidth needs.

Edge computing advantages become evident when milliseconds matter or data must remain local. Edge processing minimizes round trips to the cloud, supports offline operation, and enhances privacy by keeping sensitive information on-site. A smart hybrid cloud strategy coordinates workloads across edge gateways, local servers, and cloud services, selecting the right environment based on latency, data sensitivity, and business value. For many organizations, success comes from real-time decisions at the edge paired with cloud analytics, model updates, and governance that unify security and compliance.
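The placement rule described above can be sketched as a small decision function. This is a minimal illustration, not a definitive policy: the `Workload` fields, the `place` function, and the 80 ms cloud round-trip figure are all hypothetical names and values chosen for the example, and the sketch leaves out business value, which would need its own scoring.

```python
from dataclasses import dataclass
from enum import Enum


class Placement(Enum):
    EDGE = "edge"
    CLOUD = "cloud"


@dataclass(frozen=True)
class Workload:
    name: str
    max_latency_ms: float       # latency budget the workload can tolerate
    data_must_stay_local: bool  # privacy or sovereignty constraint
    needs_offline: bool         # must keep running without connectivity


# Hypothetical site-to-cloud round-trip time; measure your own network.
CLOUD_ROUND_TRIP_MS = 80.0


def place(w: Workload, cloud_rtt_ms: float = CLOUD_ROUND_TRIP_MS) -> Placement:
    """Return EDGE if any hard constraint rules out the cloud, else CLOUD."""
    if w.data_must_stay_local or w.needs_offline:
        return Placement.EDGE
    if w.max_latency_ms < cloud_rtt_ms:
        return Placement.EDGE
    return Placement.CLOUD


workloads = [
    Workload("machine-fault-detection", 10, False, True),
    Workload("patient-alerting", 200, True, False),
    Workload("fleet-wide-model-training", 3_600_000, False, False),
]
for w in workloads:
    print(f"{w.name}: {place(w).value}")
```

Hard constraints (data locality, offline operation) are checked before latency, so a workload with a generous latency budget still lands at the edge when its data cannot leave the site.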

Edge Computing Use Cases: Real-Time Insights and Business Value

Edge computing use cases span manufacturing floors, healthcare devices, smart cities, and retail points of presence. In manufacturing, sensing and processing at the edge support real-time monitoring, fault detection, and autonomous control loops that reduce downtime and waste. In healthcare, local processing supports immediate alerts and privacy-preserving data handling, while de-identified aggregates flow to the cloud for trends and research. These use cases illustrate how latency-sensitive processing unlocks value at the source.

To scale these edge computing use cases, design for interoperability with standard APIs, containerized workloads, and edge orchestration that can run across on-premises and cloud environments. Data preprocessing at the edge reduces data volumes sent upstream, while the cloud delivers powerful analytics, ML model training, and centralized governance. This balance—leveraging cloud scalability and edge processing—forms a practical hybrid architecture that preserves near-real-time insight while enabling enterprise-wide oversight and compliance.
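As one illustration of edge preprocessing, the sketch below collapses raw sensor readings into per-window summaries so that only the summaries travel upstream. The function name `summarize_windows`, the summary fields, and the sample readings are assumptions made for this example; a real deployment would choose its own window size and statistics.

```python
from statistics import mean
from typing import Iterable, Iterator


def _summary(batch: list) -> dict:
    """Build one compact record describing a batch of raw readings."""
    return {
        "count": len(batch),
        "min": min(batch),
        "max": max(batch),
        "mean": mean(batch),
    }


def summarize_windows(readings: Iterable[float], window: int) -> Iterator[dict]:
    """Collapse each run of `window` raw readings into one summary record."""
    if window <= 0:
        raise ValueError("window must be positive")
    batch = []
    for value in readings:
        batch.append(value)
        if len(batch) == window:
            yield _summary(batch)
            batch = []
    if batch:  # flush the final partial window
        yield _summary(batch)


# Hypothetical temperature readings collected at an edge gateway.
raw = [20.0, 21.0, 22.0, 23.0, 30.0, 31.0]
summaries = list(summarize_windows(raw, window=4))
print(f"{len(raw)} readings -> {len(summaries)} upstream records")
```

The reduction ratio is roughly the window size, so larger windows save more bandwidth at the cost of coarser detail in the cloud.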

Frequently Asked Questions

What is the difference between cloud computing and edge computing, and how should this influence a hybrid cloud strategy?

The two differ in where compute happens and in how latency, bandwidth, and data governance are handled. Cloud computing centralizes compute and storage in data centers for scalable analytics and global access. Edge computing pushes processing closer to the data source to reduce latency and enable offline operation, which is the source of its main advantages: real-time decisions and data locality. In a hybrid cloud strategy, route workloads by latency, data sensitivity, and bandwidth: perform time-critical processing at the edge, and move heavy analytics, model training, and governance to the cloud. Preprocessing at the edge can reduce cloud data transfer, while orchestration and consistent security and monitoring tie both environments together.

What are edge computing use cases and how do they relate to cloud scalability and edge processing in a hybrid cloud strategy?

Edge computing use cases span manufacturing floors, healthcare devices, smart cities, and retail points of presence where milliseconds matter. These use cases involve real-time monitoring, automated control, offline operation, and privacy-preserving data handling, all of which reduce the bandwidth sent to the cloud. In a hybrid cloud strategy, pair edge processing for immediate decisions with cloud scalability for heavy analytics, AI model training, and governance: send aggregated insights to the cloud while sustaining local execution. Invest in interoperable platforms, standard APIs, and unified security to maintain visibility and control across both environments.
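Sustaining local execution while still feeding the cloud is often handled with a store-and-forward buffer. The sketch below is a simplified, in-memory illustration of that pattern: the `StoreAndForward` class, the `send` callback, and the simulated `link` state are hypothetical, and a production system would persist the backlog to disk and add retries with backoff.

```python
from collections import deque
from typing import Callable


class StoreAndForward:
    """Buffer records locally and forward them in order when the uplink works."""

    def __init__(self, send: Callable[[dict], bool], capacity: int = 1000):
        self._send = send  # hypothetical uplink call; returns True on success
        # Bounded buffer: when full, the oldest record is dropped.
        self._pending = deque(maxlen=capacity)

    def submit(self, record: dict) -> None:
        """Queue a record, then try to drain the backlog."""
        self._pending.append(record)
        self.flush()

    def flush(self) -> int:
        """Send buffered records oldest-first; stop at the first failure."""
        sent = 0
        while self._pending:
            if not self._send(self._pending[0]):
                break
            self._pending.popleft()
            sent += 1
        return sent

    @property
    def backlog(self) -> int:
        return len(self._pending)


# Simulated uplink for demonstration only.
link = {"up": False}
delivered = []


def fake_send(record: dict) -> bool:
    if link["up"]:
        delivered.append(record)
    return link["up"]


buf = StoreAndForward(fake_send, capacity=3)
buf.submit({"id": 1})
buf.submit({"id": 2})
backlog_while_offline = buf.backlog

link["up"] = True  # connectivity returns
buf.flush()
print(backlog_while_offline, buf.backlog, len(delivered))
```

A record is removed from the buffer only after the uplink confirms it, so a dropped connection delays delivery without losing data, up to the buffer's capacity.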

| Topic | Key Points |
|---|---|
| Overview / Definition | Cloud vs Edge Computing describes a strategic decision blending centralized cloud capabilities with localized edge processing. Organizations often blend both to optimize performance, cost, security, and innovation velocity. |
| Landscape and Scope | Cloud centralizes compute, storage, and services in data centers or cloud regions; Edge pushes processing near data sources (gateways, local servers, micro data centers) to reduce latency and enable real-time decisions. |
| Key Terms | Cloud-enabled workloads for scale and centralized analytics; Edge for real-time processing and data locality; hybrid strategies combine both. |
| Location of Compute and Data | Cloud: remote data centers; Edge: near the data source for real-time insights and offline operation. |
| Latency & Bandwidth | Cloud tolerates higher latency with massive compute; Edge minimizes latency by avoiding cloud round-trips for time-critical decisions. |
| Security & Compliance | Cloud provides mature security and centralized governance; Edge expands the attack surface but can improve data privacy by localizing sensitive data. |
| Cost & TCO | Cloud costs depend on usage and data movement; Edge requires upfront device/infrastructure capex but can reduce ongoing bandwidth costs; Hybrid often yields optimal TCO. |
| Architecture Models | Cloud-first: centralized data lakes and cloud-based analytics; Edge-first: compute at the edge with local orchestration; Hybrid: a split with synchronized data and governance. |
| Use Cases & Synergy | Edge excels where milliseconds matter (industrial automation, autonomous systems); Cloud provides analytics, AI training, and governance; Together enable end-to-end capabilities. |
| Guidelines for Hybrid Strategy | Assess data gravity, latency requirements, security needs; design for resilience and interoperability; establish governance across cloud and edge. |

Summary

Cloud vs Edge Computing is a strategic choice that blends centralized cloud capabilities with localized, real-time edge processing to optimize performance, cost, and governance. A well-designed hybrid approach leverages edge for latency-sensitive tasks and cloud for scalable analytics and centralized management. By evaluating data gravity, latency needs, and security requirements, organizations can build resilient architectures that deliver real-time insights at the edge while maintaining scalable analytics in the cloud.