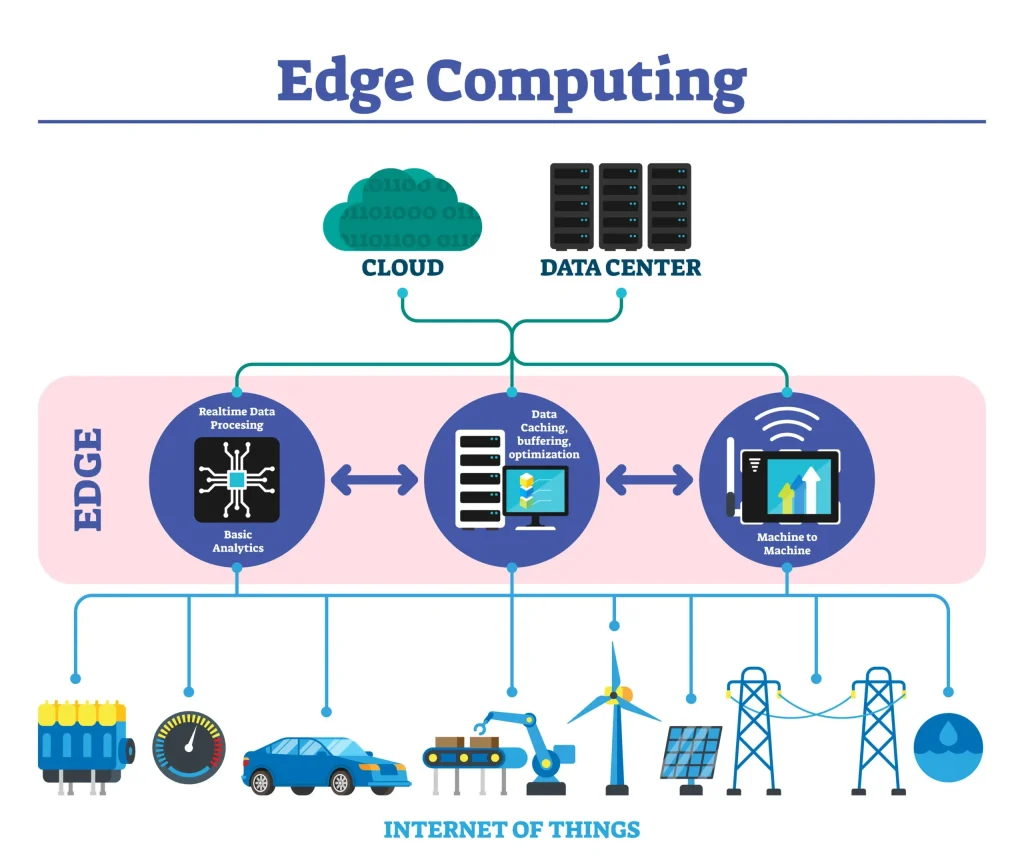

Edge computing is a paradigm that pushes data processing closer to the data source, such as sensors, devices, and network gateways, instead of sending everything to a centralized cloud. Processing at the edge can substantially reduce latency, conserve bandwidth, and improve privacy, which translates into faster decisions and more reliable operations. As organizations deploy more Internet of Things (IoT) devices, autonomous systems, and mobile applications, combining edge and cloud architectures becomes a practical way to deliver fast, reliable experiences. Edge devices and gateways enable low-latency processing by handling data locally, which helps applications react in real time. Keeping data near its source also limits how much of it crosses external networks, reducing exposure while still allowing centralized security and governance.

Put another way, edge computing is distributed computing at the network edge, where processing happens near data sources. Related terms such as near-data processing and edge analytics describe the same idea from different angles, and adjacent patterns such as fog computing and on-device AI emphasize local orchestration, privacy, and resilience. In practice, these concepts help teams design architectures that optimize latency, reduce bandwidth use, and maintain operational continuity even when connectivity is limited.

Edge Computing Benefits and Use Cases: Real-Time Value with Low Latency

Edge computing brings compute, storage, and intelligence closer to the data source—on edge devices, gateways, and nearby edge servers—delivering tangible edge computing benefits such as reduced latency, bandwidth optimization, and enhanced privacy. By processing data locally, organizations can generate insights and trigger actions within milliseconds, enabling real-time analytics, immediate control of automation, and safer autonomous systems even when connectivity to the cloud is limited.

Edge computing use cases span industries where decisions must be made near the source. In manufacturing, predictive maintenance and quality control rely on edge analytics for near-instant anomaly detection. In transportation, autonomous vehicles and drones process sensor data at the edge to navigate with minimal reliance on remote servers. Other examples include healthcare device monitoring, in-store retail analytics, and live content delivery; each shows how edge devices and gateways enable low-latency processing, reduce data transfer, and preserve privacy.
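As a rough illustration of the near-instant anomaly detection mentioned for predictive maintenance, the sketch below flags sensor readings that deviate sharply from a rolling baseline, the kind of check an edge gateway could run before anything reaches the cloud. The class name `RollingZScoreDetector`, the window size, the 3-sigma threshold, and the readings are illustrative assumptions rather than a standard method; production systems often use more robust models.

```python
import statistics
from collections import deque


class RollingZScoreDetector:
    """Hypothetical edge-side detector: flags readings far from a rolling baseline."""

    def __init__(self, window: int = 20, threshold: float = 3.0) -> None:
        self.window: deque = deque(maxlen=window)  # recent "normal" readings only
        self.threshold = threshold                 # assumed z-score cutoff

    def update(self, value: float) -> bool:
        """Return True if `value` is anomalous relative to the current window."""
        is_anomaly = False
        if len(self.window) >= 2:  # stdev needs at least two points
            mean = statistics.fmean(self.window)
            std = statistics.stdev(self.window)
            if std > 0 and abs(value - mean) / std > self.threshold:
                is_anomaly = True
        # Learn only from normal readings so a spike does not distort the baseline.
        if not is_anomaly:
            self.window.append(value)
        return is_anomaly


# Example: vibration readings from one machine (made-up values).
readings = [10.0, 10.2, 9.9, 10.1, 10.0, 9.8, 10.1, 25.0, 10.0]
detector = RollingZScoreDetector(window=20, threshold=3.0)
flags = [i for i, r in enumerate(readings) if detector.update(r)]
print(flags)  # [7]: the 25.0 spike is the only reading acted on locally
```

Excluding flagged readings from the window is a deliberate choice: otherwise a single spike would widen the baseline and mask the next one.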

Edge vs Cloud and Hybrid Architectures: Maximizing Low Latency Edge Computing and Scalable AI

Edge vs cloud is not a choice between competitors: the two are complementary layers in a hybrid IT architecture. The cloud handles heavy computation, long-term storage, and centralized AI training, while the edge delivers fast local processing, data reduction, and offline operation. An edge-first approach, in which time-sensitive workloads run at the edge and scale out to the cloud as needed, favors low-latency processing where it matters most and improves resilience.
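Offline operation is often implemented with a store-and-forward pattern: the edge node keeps working, queues results locally, and forwards them when the uplink returns. The minimal sketch below assumes a caller-supplied `send` function that returns `True` on successful delivery; `StoreAndForwardBuffer` and `fake_send` are hypothetical names, and a real deployment would persist the queue to disk rather than hold it in memory.

```python
from collections import deque
from typing import Callable


class StoreAndForwardBuffer:
    """Hypothetical in-memory queue that survives uplink outages."""

    def __init__(self, send: Callable[[dict], bool], max_items: int = 1000) -> None:
        self._send = send                             # assumed: returns True when delivered
        self._queue: deque = deque(maxlen=max_items)  # oldest entries dropped when full

    def publish(self, message: dict) -> None:
        """Queue a message, then try to drain the queue."""
        self._queue.append(message)
        self.flush()

    def flush(self) -> int:
        """Deliver queued messages in order; stop at the first failure."""
        sent = 0
        while self._queue:
            if not self._send(self._queue[0]):
                break  # uplink down: keep the message for the next attempt
            self._queue.popleft()
            sent += 1
        return sent

    def __len__(self) -> int:
        return len(self._queue)


# Example with a simulated uplink that is down, then restored.
link_up = False
delivered = []


def fake_send(message: dict) -> bool:
    if link_up:
        delivered.append(message)
        return True
    return False


buffer = StoreAndForwardBuffer(fake_send)
buffer.publish({"seq": 1})
buffer.publish({"seq": 2})
print(len(buffer))     # 2: both messages are held while offline
link_up = True
print(buffer.flush())  # 2: both are forwarded once connectivity returns
print(delivered)
```

The bounded `deque` trades completeness for safety: during a very long outage the oldest messages are discarded rather than exhausting device memory.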

A successful hybrid setup starts with a pilot: pick a single edge use case, define latency and bandwidth targets, and evaluate connectivity constraints. Distribute workloads across edge devices, gateways, and multi-access edge computing (MEC) infrastructure, and plan security and management up front, including secure boot, encryption, and zero-trust access. Architectural patterns such as edge-native analytics, tiered data processing, and federated learning help balance responsiveness with the cloud's scalability.
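Federated learning trains models at the edge and shares only model updates. A common aggregation step is federated averaging (FedAvg), in which the coordinator averages each site's weights in proportion to its number of local training samples. The sketch below shows only that aggregation step on plain Python lists; the function name and example weights are hypothetical, and real systems would use an ML framework and typically add secure aggregation.

```python
from typing import List, Sequence, Tuple


def federated_average(updates: Sequence[Tuple[List[float], int]]) -> List[float]:
    """Sample-weighted average of model weights reported by edge sites.

    Each update is (weights, num_local_samples); raw training data stays on site.
    """
    if not updates:
        raise ValueError("no updates to aggregate")
    total = sum(n for _, n in updates)
    if total == 0:
        raise ValueError("no training samples reported")
    dim = len(updates[0][0])
    averaged = [0.0] * dim
    for weights, n in updates:
        if len(weights) != dim:
            raise ValueError("all sites must report the same model shape")
        for i, w in enumerate(weights):
            averaged[i] += w * n / total
    return averaged


# Example: two sites with different amounts of local data (made-up numbers).
site_a = ([1.0, 2.0], 100)
site_b = ([3.0, 4.0], 300)
print(federated_average([site_a, site_b]))  # [2.5, 3.5]
```

Site B contributes three quarters of the result because it trained on three times as many samples.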

Frequently Asked Questions

What are the key edge computing benefits, and how does low latency edge computing enable real-time decision-making?

Edge computing moves compute, storage, and intelligence closer to data sources such as sensors and devices, delivering latency reduction, bandwidth savings, and enhanced privacy. By processing data at the edge, organizations can achieve real-time analytics and control, even during intermittent connectivity. In practice, applications such as industrial automation, robotics, and intelligent video surveillance often require decisions within tens of milliseconds, which low-latency edge computing makes achievable. The cloud remains responsible for heavier processing and orchestration, while the edge handles time-sensitive work.
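To make "within tens of milliseconds" concrete, a pilot can compare an end-to-end latency budget for each placement option. The sketch below adds network round-trip time to processing time and checks the total against a deadline; the `PathEstimate` type and all numbers are hypothetical placeholders, to be replaced with measurements from the actual deployment.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class PathEstimate:
    """Hypothetical latency estimate for one placement option."""
    name: str
    network_rtt_ms: float  # round trip between device and compute location
    processing_ms: float   # inference or decision time at that location

    def total_ms(self) -> float:
        return self.network_rtt_ms + self.processing_ms


def feasible_paths(paths: List[PathEstimate], deadline_ms: float) -> List[str]:
    """Names of the placements whose end-to-end latency fits the deadline."""
    return [p.name for p in paths if p.total_ms() <= deadline_ms]


# Illustrative numbers only: a nearby edge server vs. a distant cloud region.
edge = PathEstimate("edge", network_rtt_ms=2.0, processing_ms=8.0)
cloud = PathEstimate("cloud", network_rtt_ms=60.0, processing_ms=3.0)
print(edge.total_ms(), cloud.total_ms())                # 10.0 63.0
print(feasible_paths([edge, cloud], deadline_ms=20.0))  # ['edge']
```

In this made-up example the cloud processes faster but still misses the deadline, because the network round trip dominates the budget.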

How do edge and cloud architectures compare, and what roles do edge devices play in common edge computing use cases?

Edge computing and cloud computing are complementary. Edge devices (sensors, actuators, cameras), gateways, and edge servers process data near its source to reduce latency and bandwidth needs, while the cloud handles scalable analytics and long-term storage. A typical pattern is to perform time-sensitive processing at the edge and send aggregated data to the cloud for deeper analysis. Use cases include Industrial IoT with predictive maintenance, smart manufacturing, autonomous vehicles, healthcare monitoring, retail analytics, and edge-enabled content delivery. This approach relies on edge devices for local inference and data reduction, with cloud orchestration for scalability and global management.
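The pattern of processing at the edge and sending aggregated data to the cloud can be as simple as collapsing a window of raw readings into one summary record, while still forwarding any out-of-range values. The sketch below compares the JSON payload sizes of both approaches; the field names, the device ID `sensor-7`, the 30.0 limit, and the readings are assumptions chosen for illustration.

```python
import json
from statistics import fmean
from typing import List


def summarize_window(device_id: str, readings: List[float], limit: float) -> dict:
    """Collapse one window of raw readings into a single summary record."""
    return {
        "device": device_id,
        "count": len(readings),
        "min": min(readings),
        "max": max(readings),
        "mean": round(fmean(readings), 3),
        # Out-of-range raw values are still forwarded for cloud-side inspection.
        "alerts": [r for r in readings if r > limit],
    }


# One minute of hypothetical temperature readings at 1 Hz, with one spike.
readings = [20.0 + 0.1 * (i % 5) for i in range(59)] + [35.0]

raw_payload = json.dumps([{"device": "sensor-7", "value": r} for r in readings])
summary = summarize_window("sensor-7", readings, limit=30.0)
summary_payload = json.dumps(summary)

print(summary["count"], summary["alerts"])  # 60 [35.0]
print(len(raw_payload), "bytes raw vs", len(summary_payload), "bytes summarized")
```

The cloud still learns about the spike, but receives one record per window instead of sixty.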

| Aspect | Key Points | Notes / Examples |
|---|---|---|
| Definition | Edge computing pushes data processing closer to the data source (sensors, devices, gateways) instead of sending everything to a centralized cloud. | Leads to lower latency, potential bandwidth savings, and improved privacy by keeping data nearer to origin. |
| Core Mechanism | Compute, storage, and intelligence are brought near data generation; edge nodes process, filter, or act locally. | Edge devices, gateways, and on-site servers perform local processing; the cloud handles heavy lifting and orchestration. |
| Benefits: Latency | Significantly reduces response times for time-sensitive tasks. | Used in industrial automation, robotics, and real-time control. |
| Benefits: Bandwidth & Costs | Local filtering/aggregation reduces data sent to the cloud. | Lowers network load and operating costs by transmitting only essential information. |
| Benefits: Privacy & Security | Data can stay within local networks, reducing exposure and aiding compliance. | Supports data residency and regulatory requirements; reduces risk from external threats. |
| Resilience & Uptime | Local processing can continue during connectivity interruptions. | Critical services remain available even with unstable cloud access. |
| Use Cases (Where It Shines) | Industrial IoT, smart manufacturing, autonomous systems, healthcare, retail, etc. | Low-latency analytics, offline capability, and localized data prep are typical drivers. |
| Key Components | Edge devices, edge gateways, edge servers, MEC, and orchestration/management tooling | Security, deployment automation, and remote management are essential in real deployments. |
| Edge vs Cloud | Complementary layers; cloud handles heavy processing, global analytics, and central governance. | Hybrid architectures balance local speed with cloud scalability. |
| Architectural Patterns | Edge-native analytics, tiered data processing, fog computing, federated/on-device AI | Guides design decisions for distribution of workloads between edge and cloud. |
| Security & Implementation | Multi-layer security: secure boot, hardware roots of trust, encryption, access control, and zero-trust for edge services. | Ongoing updates, anomaly detection, and tamper-evident logging are critical. |
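Tamper-evident logging is commonly built as a hash chain: each log entry stores a SHA-256 hash covering its content plus the previous entry's hash, so editing an earlier entry breaks every link after it. The sketch below uses only Python's standard library; the entry layout and example events are assumptions. On its own, a chain only proves tampering if the latest hash is also anchored somewhere an attacker cannot rewrite (for example, signed with a hardware-backed key or sent to a central server), which is omitted here.

```python
import hashlib
import json
from typing import List

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(event: dict, prev_hash: str) -> str:
    # sort_keys makes the serialization, and therefore the hash, deterministic.
    body = json.dumps({"event": event, "prev": prev_hash}, sort_keys=True)
    return hashlib.sha256(body.encode("utf-8")).hexdigest()


def append_entry(log: List[dict], event: dict) -> dict:
    """Append an event linked to the hash of the previous entry."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    entry = {"event": event, "prev": prev_hash, "hash": _digest(event, prev_hash)}
    log.append(entry)
    return entry


def verify_chain(log: List[dict]) -> bool:
    """Recompute every link and report whether the chain is internally consistent."""
    prev_hash = GENESIS
    for entry in log:
        if entry["prev"] != prev_hash or entry["hash"] != _digest(entry["event"], prev_hash):
            return False
        prev_hash = entry["hash"]
    return True


# Example: an edge gateway logging made-up configuration and access events.
log: List[dict] = []
append_entry(log, {"action": "firmware_update", "version": "1.4.2"})
append_entry(log, {"action": "login", "user": "operator"})
append_entry(log, {"action": "config_change", "key": "sample_rate"})
print(verify_chain(log))  # True

log[1]["event"] = {"action": "login", "user": "attacker"}
print(verify_chain(log))  # False
```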

Summary

Edge computing is a transformative paradigm that enables real-time decision-making by processing data close to the source. It reduces latency, conserves bandwidth, enhances privacy, and supports offline operation, which is critical for IoT, autonomous systems, and mobile applications. When combined with cloud computing, edge and cloud form a hybrid model that balances fast local processing with scalable analytics and centralized governance. Organizations should start with a pilot use case at the edge, identify latency and data requirements, select appropriate edge devices and gateways, and implement robust security and management practices. Looking ahead, edge computing will be strengthened by AI at the edge, MEC, 5G integration, and standardization, driving resilient, intelligent systems that operate at the speed of business.